Data Scientific Intuition that defines Good vs. Bad scientists

Many amateur data scientists have little respect for the math/stat behind computational models

Math/stat captures the modelers' logic and intuition about real-world data

Good data scientists are ones with excellent intuition

On SIAI's website, we can see that most wannabe students go to the MSc AI/Data Science program intro page and almost never visit the MBA AI program pages. We have a shorter MSc track that requires extensive pre-study, and a much longer version that covers the missing pre-study. Over 90% of wannabes take a quick scan of the shorter version and walk away. Fewer than 10% look at the longer version, and almost nobody visits the AI MBA.

We get that they are 'wannabe' data scientists with passion, motivation, and dreams, confident that they are in the top 1%. But the reality is harsh. So far, fewer than 5% of applicants have been able to pass the admission exam for the longer version of the MSc AI/Data Science. We almost never have applicants who are ready for the shorter one. Most, in fact almost all, students have to compromise on their dream and accept reality. The admission exam consists of the first two courses of the AI MBA, our lowest-tier program, and that fact alone brings students to their senses: over half of the applicants usually disappear before or after the exam. Some students choose to retake the exam the following year, but mostly end up with the same score. Then they either criticize the school in very creative ways or walk away with frustrated faces. I am sorry for keeping the school's integrity so high.

Data Scientific Intuition that matters the most

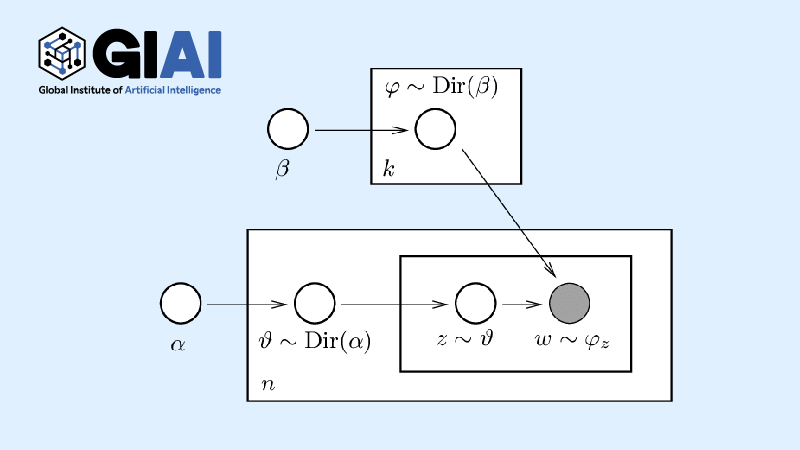

The school focuses on two things in its education. First, we want students to understand the thought processes of data science modelers. Support Vector Machine (SVM), for example, reflects the idea that a fit generalizes better if the separating hyperplane is bounded by inequalities instead of fixed conditions. Once one understands that the hyperplane itself is already a generalization, it becomes much easier to see why SVM was introduced as an alternative to linear-form fitting and which cases it applies to in real-life data science exercises. The very nature of this process is embedded in the school's motto, 'Rerum Cognoscere Causas' (see 'Felix, qui potuit rerum cognoscere causas' on Wikipedia), meaning a person who pursues the fundamental causes.
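As a rough illustration of what "bounded by inequalities" means, here is one standard textbook form of the soft-margin SVM problem. The notation ($w$, $b$, $\xi_i$, $C$, labels $y_i \in \{-1, +1\}$) is an assumption of this sketch and not necessarily the notation used in the school's coursework.

$$
\min_{w,\,b,\,\xi}\ \frac{1}{2}\lVert w\rVert^{2} + C\sum_{i=1}^{n}\xi_i
\quad\text{subject to}\quad
y_i\,(w^{\top}x_i + b)\ \ge\ 1-\xi_i,\qquad \xi_i \ge 0,\qquad i=1,\dots,n.
$$

Each observation only has to sit on the correct side of the margin, up to a slack $\xi_i$, rather than satisfy an equality. Dividing the objective by $C > 0$ leaves the minimizer unchanged and gives the equivalent form $\frac{1}{2C}\lVert w\rVert^{2} + \sum_{i}\xi_i$, in which $1/C$ appears as the weight on the margin term.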

The second focus of the school is to help students learn where and how to apply data science tools to solve real-life puzzles. We call this process building data scientific intuition. Often, the math equations in textbooks and the code lines on one's console screen have no meaning unless they are combined to solve a particular problem in a particular context with a specific objective. Contrary to what many amateur data scientists believe, coding libraries have not democratized data science for untrained students. In fact, the code copied by amateurs is clear evidence of rookie failure: data science tools need much deeper background knowledge in statistics than simple code libraries can supply.

Our admission exam is designed to weed out dreamers and amateurs. After years of trial and error, we decided to give a full lecture of an elementary math/stat course to all applicants, so that we can not only offer them a fair chance but also give them a warning as realistic as our coursework. Previous schooling elsewhere may help them, but the exam helps us see whether one has the potential to develop 'Rerum Cognoscere Causas' and data scientific intuition.

Intuition does not come from hard study alone

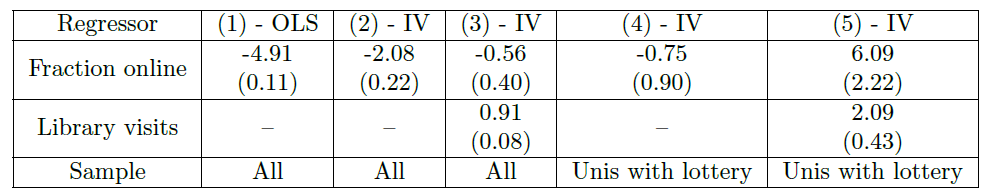

When I first spoke up about the importance of data scientific intuition, I had severe conflicts with amateur engineers. They thought that copying code lines from a class (or a GitHub page) and applying them elsewhere would make them as good as highly paid data scientists. They thought this work was nothing more than programming for websites, apps, or any other basic programming exercise. These amateurs never understand why, just as an example, you need a two-stage least squares (2SLS) regression to remove measurement error effects for a particular data set in a specific time range. They just load data from a SQL server, feed it to a code library, and change input variables, time ranges, and computing resources, hoping that one combination out of many will find what their bosses want (or something they can claim as cool work). Without understanding the nature of the process behind the data, which we call the 'data generating process' (DGP), their trial and error is nothing more than hunting for higher correlations, as untrained sociologists do in their junk research.

Instead of blaming one code library for performing worse than another, true data scientists look for the embedded DGP and try to build a model that follows intuitive logic. Every step of the model requires concrete arguments reflecting how the data was constructed, and sometimes requires data cleaning by variable restructuring, carving out endogeneity with 2SLS, and/or countless model revisions.
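To make the 2SLS point concrete, here is a minimal sketch on simulated data. Everything in it is an assumption made for illustration: the DGP (a true slope of 2, a regressor observed with noise, and a second independent noisy measurement used as the instrument `z`) and the helper names `simple_ols` and `two_stage_least_squares` are hypothetical, not taken from the school's coursework.

```python
import random

def simple_ols(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    return my - slope * mx, slope

def two_stage_least_squares(x, z, y):
    """2SLS with one endogenous regressor x and one instrument z."""
    # Stage 1: project the noisy regressor on the instrument.
    a1, b1 = simple_ols(z, x)
    x_hat = [a1 + b1 * zi for zi in z]
    # Stage 2: regress the outcome on the fitted values from stage 1.
    return simple_ols(x_hat, y)

# Assumed DGP: y depends on x_true, but we only observe x_obs = x_true + noise.
rng = random.Random(0)
n = 20000
x_true = [rng.gauss(0, 1) for _ in range(n)]
x_obs = [xt + rng.gauss(0, 1) for xt in x_true]   # measurement error
z = [xt + rng.gauss(0, 1) for xt in x_true]       # independent second measurement
y = [1.0 + 2.0 * xt + rng.gauss(0, 1) for xt in x_true]

_, beta_ols = simple_ols(x_obs, y)
_, beta_2sls = two_stage_least_squares(x_obs, z, y)
print(f"OLS slope:  {beta_ols:.3f}")
print(f"2SLS slope: {beta_2sls:.3f}")
```

Under this assumed DGP the measurement error has the same variance as the true regressor, so the OLS slope is pulled toward half of the true value, while the 2SLS slope should land close to 2. Changing the code library would not fix the OLS bias; only knowing how the data was generated tells you that an instrument is needed.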

Years of teaching have shown us that we can help students memorize all the necessary steps for each textbook case, but not many students are able to extend that understanding to their own research. In fact, the potential is clearly visible in the admission exam or in the early stage of the coursework. Promising students always ask why and what if. Why does SVM's functional form have $1/C$, which may limit the range of $C$ in their model, and what if their data set with zero truncation ends up with a separating hyperplane close to 0? Once students can see how to match equations with real cases, they can upgrade imaginative thought processes into model-building logic. As for the other students, I am sorry, but I cannot recall a successful student without that ability. High grades in simple memory tests can convince us that they study hard, but lack of intuition makes them no better than a textbook. With this experience, we design all our exams to measure how intuitive students are.

Intuition that frees a data scientist

In my Machine Learning class on tree models, I always emphasize that a variable with multiple disconnected effective ranges in a tree spans a different space from linear/non-linear regressions. A variable that is important in a tree space, for example, may not display a strong tendency in linear vector spaces. A drug that is only effective for certain age/gender groups (say ages 5 to 15 and 60 or older for males, 20 to 45 for females) is a good example. Linear regression will hardly capture the same effective ranges. After the class, most students understand that relying on the Variable Importances of tree models may conflict with p-value type variable selection in regression-based models. But only students with intuition find a way to combine both models: they find the effective ranges of a variable from the tree and redesign the regression model with 0/1 signal variables that separate the effective ranges.
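The combination described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the class material: it uses a single group (the male ranges, ages 5 to 15 and 60 or older), a simulated effect of 2 with Gaussian noise, and a hand-rolled one-variable regression tree (`best_split`, `grow_tree`) so that the example needs no external library. All names and numbers are hypothetical.

```python
import random

def fit_line(x, y):
    """Least-squares fit of y = a + b*x; returns (a, b, r_squared)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    syy = sum((yi - my) ** 2 for yi in y)
    b = sxy / sxx
    return my - b * mx, b, (sxy * sxy) / (sxx * syy)

def best_split(pairs, min_leaf):
    """Best threshold for one binary split of (x, y) pairs sorted by x, or None."""
    n = len(pairs)
    total = sum(y for _, y in pairs)
    best_gain, best_thr, left = 0.0, None, 0.0
    for i in range(1, n):
        left += pairs[i - 1][1]
        if i < min_leaf or n - i < min_leaf or pairs[i][0] == pairs[i - 1][0]:
            continue
        # Reduction in squared error from splitting between items i-1 and i.
        gain = left * left / i + (total - left) ** 2 / (n - i) - total * total / n
        if gain > best_gain:
            best_gain, best_thr = gain, (pairs[i - 1][0] + pairs[i][0]) / 2
    return best_thr

def grow_tree(pairs, depth, min_leaf=50):
    """Return leaves (lo, hi, mean_y) of a depth-limited one-variable tree."""
    thr = best_split(pairs, min_leaf) if depth > 0 else None
    if thr is None:
        return [(pairs[0][0], pairs[-1][0], sum(y for _, y in pairs) / len(pairs))]
    left = [p for p in pairs if p[0] < thr]
    right = [p for p in pairs if p[0] >= thr]
    return grow_tree(left, depth - 1, min_leaf) + grow_tree(right, depth - 1, min_leaf)

# Assumed DGP: the drug works only for ages 5-15 and 60+.
rng = random.Random(0)
n = 4000
age = [rng.uniform(0, 80) for _ in range(n)]
truth = [1 if (5 <= a <= 15 or a >= 60) else 0 for a in age]
y = [2.0 * t + rng.gauss(0, 0.5) for t in truth]

# Step 1: let the tree locate the effective ranges.
leaves = grow_tree(sorted(zip(age, y)), depth=3)
means = [m for _, _, m in leaves]
cutoff = (min(means) + max(means)) / 2
effective = [(lo, hi) for lo, hi, m in leaves if m > cutoff]

# Step 2: redesign the regression with a 0/1 signal variable for those ranges.
dummy = [1 if any(lo <= a <= hi for lo, hi in effective) else 0 for a in age]
_, slope_age, r2_age = fit_line(age, y)
_, slope_dummy, r2_dummy = fit_line(dummy, y)
agreement = sum(d == t for d, t in zip(dummy, truth)) / n
print(f"R^2 with raw age:    {r2_age:.3f}")
print(f"R^2 with 0/1 signal: {r2_dummy:.3f}, slope {slope_dummy:.3f}")
```

In this setup the regression on raw age explains little of the outcome, because the effective ranges are disconnected, while the regression on the tree-derived signal variable should recover a slope near the simulated effect. In practice one would more likely use an established tree library and inspect its split thresholds; the hand-rolled tree here only keeps the sketch self-contained.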

The extent of this type of thought process is hardly visible in ordinary and disqualified students. Ordinary ones may have the capacity to discern what is good, but they often have a hard time applying new findings to their own work. Disqualified students do not even see why that was a neat trick for better exploitation of the DGP.

What's surprising is that previous math/stat education mattered the least. It was more about how logical they are, how hard-working they are, and how intuitive they are. Many students come with the first two, but hardly the third. We help them build the third muscle while strengthening the first. (No one but you can help the second.)

Students who retake the admission exam largely end up with the same grades because they fail to embody the intuition. It may take years to develop the third muscle. Some students are smart enough to see the value of intuition almost right away. Others may never find it. For failing students, as much as we feel sorry for them, we think that their undergraduate education did not help them build the muscle, and they were unable to build it by themselves.

The less challenging tier programs are designed to help the unlucky ones, if they want to make up for the missing pieces from their undergraduate coursework. Blue pills only make you live in a fake reality. We just hope our red pill helps you find the bitter but rewarding reality.